“We treated trust as a design principle from day one”

We recently shared how we redesigned CX Score to deliver deeper, more actionable insights across every conversation. The most common follow-up from support leaders was the one that matters most: “Can I trust it?”

It’s the right question.

CS teams are the subject matter experts on customer experience. They understand the nuance of what customers feel, the context behind every interaction, the difference between a technically resolved issue and a genuinely satisfied customer. Until now, there hasn’t been a metric that could reliably match that expertise at scale.

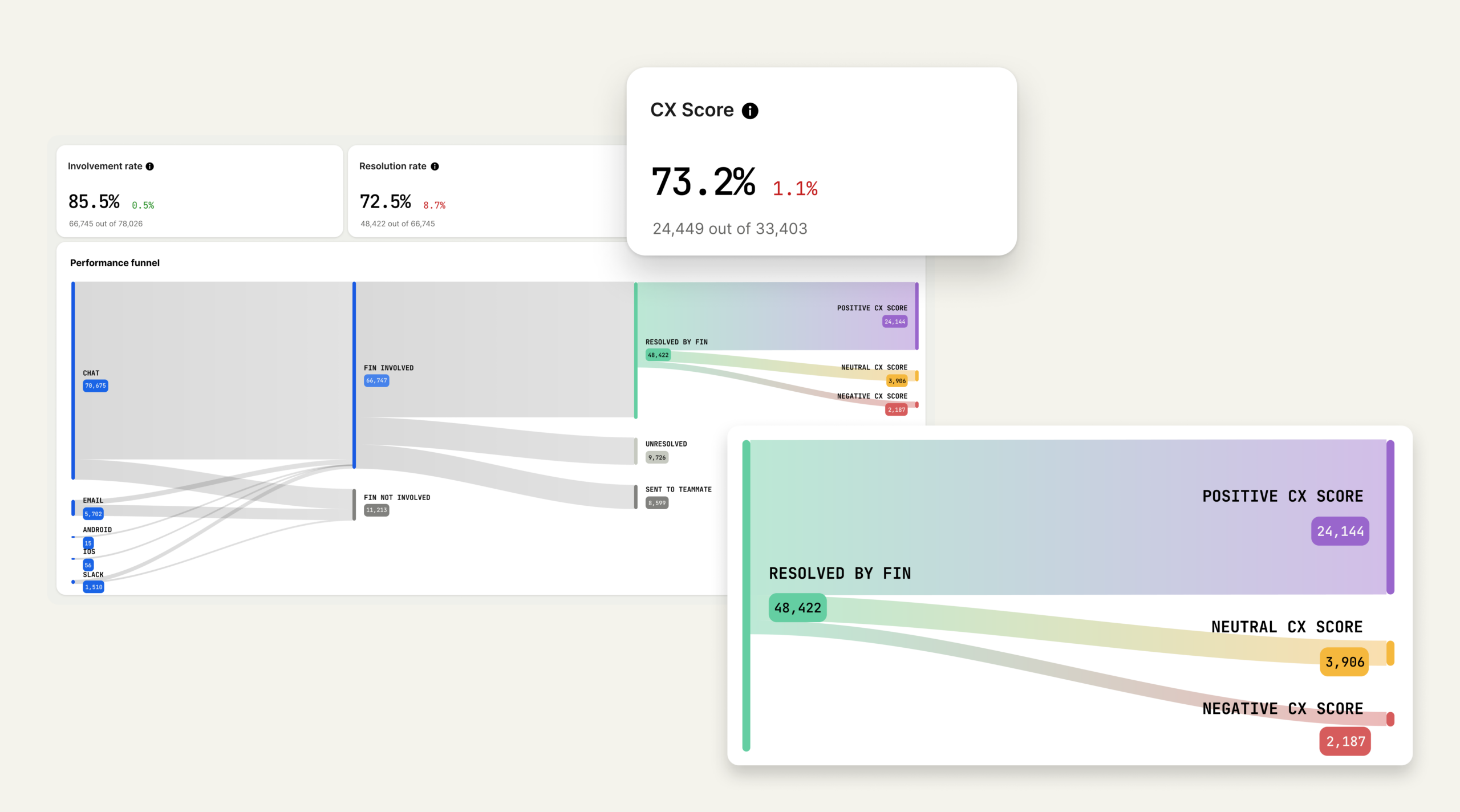

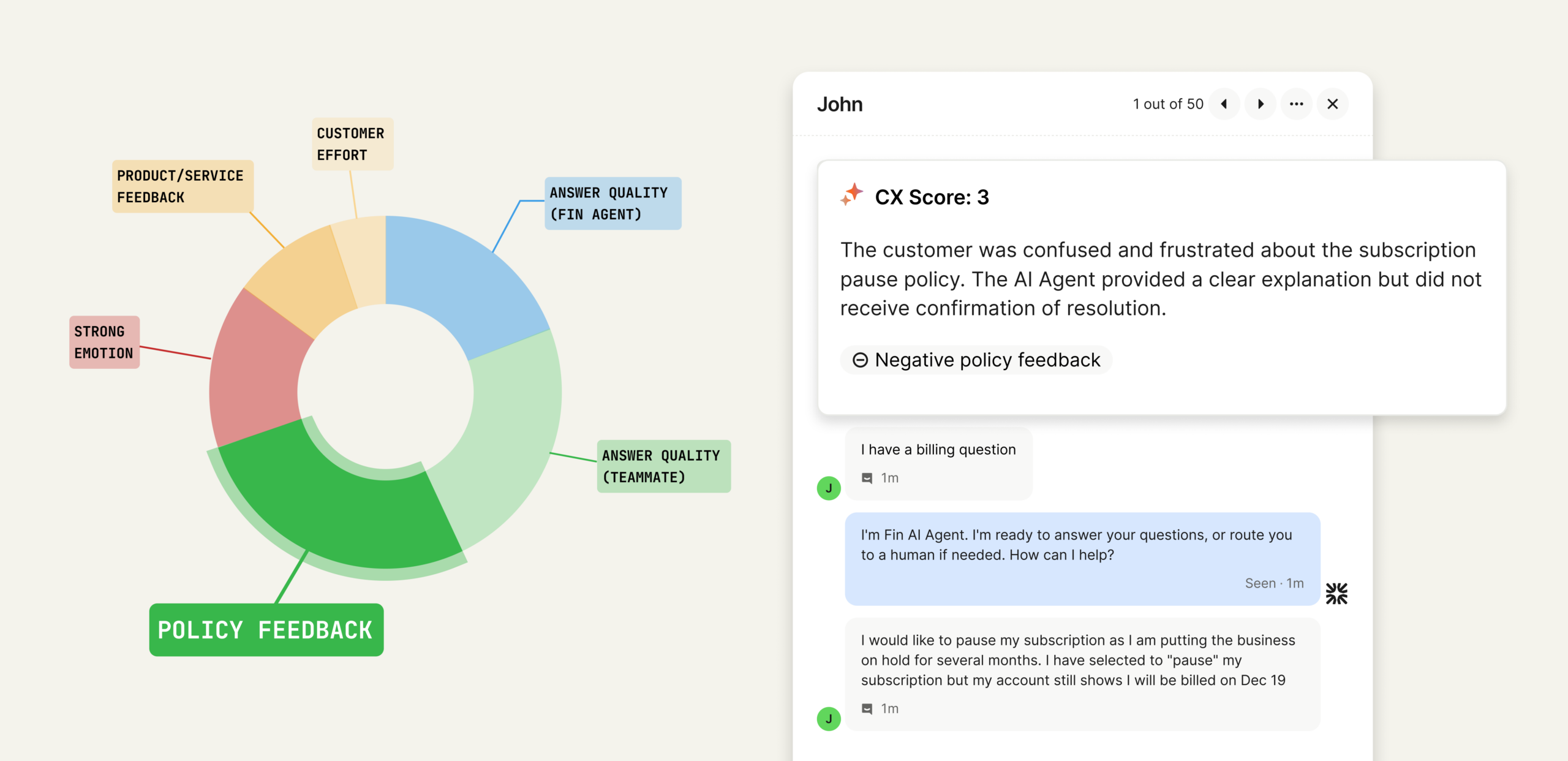

We built CX Score to give support teams a complete view of the customer experience across every conversation. It surfaces what’s working, what’s not, and why—so leaders can communicate impact clearly and drive change across support, product, and the wider business.

Five steps to building a trustworthy metric

We treated trust as a design principle from day one—starting with how support professionals actually evaluate conversations, validating every decision against human judgment, and creating a system transparent enough to inspect and defend.

1. It’s grounded in how support teams define quality

We started with how experienced support professionals actually evaluate conversations—collecting real examples of strong, mixed, and poor interactions across industries, identifying the specific factors that shape overall experience, and writing plain-English rules for each.

The result: CX Score applies the same criteria a trained support professional would use, not generic LLM assumptions.

2. It’s aligned with human judgment

We created a dataset of thousands of real customer conversations spanning multiple industries, languages, channels, and agent types. Each was manually reviewed by experienced support professionals—with two reviewers per conversation where possible and disagreement resolution to create stable consensus labels.

The result: CX Score is trained and tested to behave like an expert reviewer, not a language model making broad guesses.
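
As a rough illustration of that labeling step, here is a minimal Python sketch of turning one or two reviews into a single consensus label. The label names, the `consensus_label` and `agreement_rate` helpers, and the `resolve` callback are all hypothetical. The actual review tooling and resolution process behind CX Score are not described in this post.

```python
from typing import Callable, Iterable, Optional, Tuple

Label = str  # hypothetical label set, e.g. "positive", "neutral", "negative"


def consensus_label(
    first: Label,
    second: Optional[Label],
    resolve: Callable[[Label, Label], Label],
) -> Label:
    """Return one stable label for a conversation reviewed by one or two people."""
    if second is None:
        # Only one reviewer was available for this conversation.
        return first
    if first == second:
        # Both reviewers agree, so no resolution is needed.
        return first
    # Reviewers disagree: hand both labels to a resolution step
    # (assumed here to be something like a third, senior reviewer).
    return resolve(first, second)


def agreement_rate(pairs: Iterable[Tuple[Label, Optional[Label]]]) -> float:
    """Share of doubly reviewed conversations where both reviewers agreed."""
    doubles = [(a, b) for a, b in pairs if b is not None]
    if not doubles:
        return 0.0
    return sum(a == b for a, b in doubles) / len(doubles)
```

Tracking the raw agreement rate alongside the consensus labels is one way to see how much the final dataset depends on the resolution step.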

3. It’s engineered by AI specialists

CX Score is more than a single prompt. It’s a production system with specialized prompt engineering, infrastructure for scale, and a rigorous evaluation pipeline. It’s built and maintained by Intercom’s AI Group.

The system's model configuration is fine-tuned for support language and subtle sentiment, and it was rigorously tested across many different LLMs. It uses specialized prompt engineering to evaluate individual quality signals conservatively, defaulting them to neutral when evidence is ambiguous. Every model revision goes through a multi-stage evaluation process that measures precision, recall, and agreement with human judgment before release.

The result: A metric built by a team that understands LLM behavior in production support environments, where accuracy and consistency matter most.
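
To make "defaulting to neutral when evidence is ambiguous" concrete, here is a minimal Python sketch. It assumes each quality signal comes with a verdict and a confidence value, and that a fixed threshold decides what counts as ambiguous. The `SignalReading` type, the signal name, and the `0.7` threshold are illustrative assumptions, not details of how CX Score works internally.

```python
from dataclasses import dataclass


@dataclass
class SignalReading:
    name: str          # hypothetical signal name, e.g. "issue_resolved"
    verdict: str       # "positive" or "negative"
    confidence: float  # 0.0 to 1.0, assumed strength of the evidence


def conservative_verdict(reading: SignalReading, min_confidence: float = 0.7) -> str:
    """Keep the verdict only when the evidence is strong; otherwise stay neutral."""
    if reading.confidence < min_confidence:
        # Ambiguous evidence: do not let this signal push the score either way.
        return "neutral"
    return reading.verdict


weak = SignalReading("issue_resolved", "negative", confidence=0.4)
strong = SignalReading("issue_resolved", "negative", confidence=0.9)
print(conservative_verdict(weak))    # neutral
print(conservative_verdict(strong))  # negative
```

The design choice is that an uncertain signal contributes nothing rather than contributing a guess.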

4. It’s validated statistically and qualitatively

Trust requires measurement, not vibes.

We tested CX Score across standard ML metrics: precision (when the model flags a negative experience, how often do humans agree?), recall (how many human-identified issues does it catch?), and F1 score (the harmonic mean of the two, which balances them).

We set an explicit bar: F1 above 0.8, representing high agreement with human judgment. We reran these evaluations for every revision, checking for regressions or biases. And we focused especially on negative experiences, because a false negative (a poor experience the model fails to flag) hides a real problem.

The result: CX Score meets a measurable standard before it ships—not a gut check, a statistical requirement.
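
For readers who want to see the arithmetic, here is a minimal Python sketch of precision, recall, and F1 for the "negative experience" class, plus a check against the 0.8 bar. The function names and the six sample labels are made up for illustration. This is not Intercom's evaluation pipeline.

```python
from typing import Sequence, Tuple


def precision_recall_f1(
    human: Sequence[str], model: Sequence[str], target: str = "negative"
) -> Tuple[float, float, float]:
    """Compare model labels with human labels for one target class."""
    pairs = list(zip(human, model))
    tp = sum(h == target and m == target for h, m in pairs)  # both flag it
    fp = sum(h != target and m == target for h, m in pairs)  # model only
    fn = sum(h == target and m != target for h, m in pairs)  # human only
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def passes_release_bar(f1: float, bar: float = 0.8) -> bool:
    """The post states the bar as F1 above 0.8."""
    return f1 > bar


# Made-up example: 3 true positives, 1 false positive, 1 false negative.
human = ["negative", "negative", "negative", "positive", "positive", "negative"]
model = ["negative", "negative", "positive", "positive", "negative", "negative"]

p, r, f1 = precision_recall_f1(human, model)
print(p, r, f1)                # 0.75 0.75 0.75
print(passes_release_bar(f1))  # False
```

In this toy example the model misses one human-flagged negative and raises one false alarm, so F1 is 0.75 and the revision would not clear the bar.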

5. It was battle-tested with real customers

Lab accuracy isn’t enough. Customer environments are messy: varied ticket types, mixed languages, unpredictable edge cases.

Before release, we ran a multi-phase field test—shadow-scoring conversations with both old and new models, validating sensible behavior across agent type and conversation length, then rolling out to a controlled customer group who confirmed the scores felt right, reasons were clear, and insights were actionable.

The result: CX Score shipped because real teams told us it made sense in practice, not because it passed internal tests.
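
Shadow scoring, as described above, means running the new model alongside the old one on the same conversations and comparing the results before anyone relies on the new scores. A minimal Python sketch of that comparison, grouped by agent type, might look like the following. The conversation fields, the `shadow_compare` helper, and the stand-in scoring functions are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable


def shadow_compare(
    conversations: Iterable[dict],
    old_model: Callable[[dict], int],
    new_model: Callable[[dict], int],
) -> Dict[str, float]:
    """Score each conversation with both models; return disagreement rate per agent type."""
    totals: Dict[str, int] = defaultdict(int)
    diffs: Dict[str, int] = defaultdict(int)
    for convo in conversations:
        old_score = old_model(convo)  # the score currently in use
        new_score = new_model(convo)  # computed silently, for comparison only
        agent_type = convo["agent_type"]
        totals[agent_type] += 1
        if old_score != new_score:
            diffs[agent_type] += 1
    return {agent: diffs[agent] / totals[agent] for agent in totals}


# Stand-in data and models, purely for illustration.
sample = [
    {"agent_type": "human", "length": 3},
    {"agent_type": "ai", "length": 10},
    {"agent_type": "ai", "length": 2},
]
old = lambda c: 3
new = lambda c: 3 if c["length"] < 5 else 4
print(shadow_compare(sample, old, new))  # {'human': 0.0, 'ai': 0.5}
```

Grouping by another field, such as conversation length, follows the same pattern and helps reveal whether the two models diverge only on certain kinds of conversations.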

The takeaway

Support leaders asked the right question: “Can I trust it?” CX Score is designed to earn that trust because it was built and validated as a metric you can inspect and defend, not a black-box model output.

It’s grounded in how support teams define quality, aligned to expert human review, engineered for consistency in production, held to a clear evaluation bar, and battle-tested with real customers. If you’ve ever had to justify CS metrics to your exec team, CX Score is designed to be the single source of truth you can stand behind.

Want to see CX Score in your workspace? Get started →

This is the second post in our CX Score series. Missed the first one? Read how CX Score evolved to provide deeper, more actionable insights →